HubSpot positions MCP as the bridge between CRMs and modern AI tools: a future where marketers and RevOps leaders ask everyday questions in plain English and instantly get answers grounded in their actual data.

The demos look smooth. The narrative is strong.

But none of that matters until you point these systems at a real HubSpot instance, the kind real-life marketing teams actually operate in.

So we did.

In a state-of-the-art (SOTA) comparison test, we connected both Claude Opus 4.5 and ChatGPT 5/5.1 to a live HubSpot environment through MCP.

This is the story of what unfolded.


The Setup: Real Data, Real Workflows

To make the test meaningful, both models were connected to a HubSpot tenant that mirrors what you’d find in any scaled GTM operation:

- 140K+ contacts

- Lifecycle stages used inconsistently (like every real CRM)

- Original sources scattered across years

- Deals tied to multiple channels

- Data inherited from multiple owners and ops eras

- No helper tables or engineered join fields

If MCP is supposed to support real workflows, it needs to handle real data. That’s exactly what we gave it.

What We Asked: Core GTM Questions

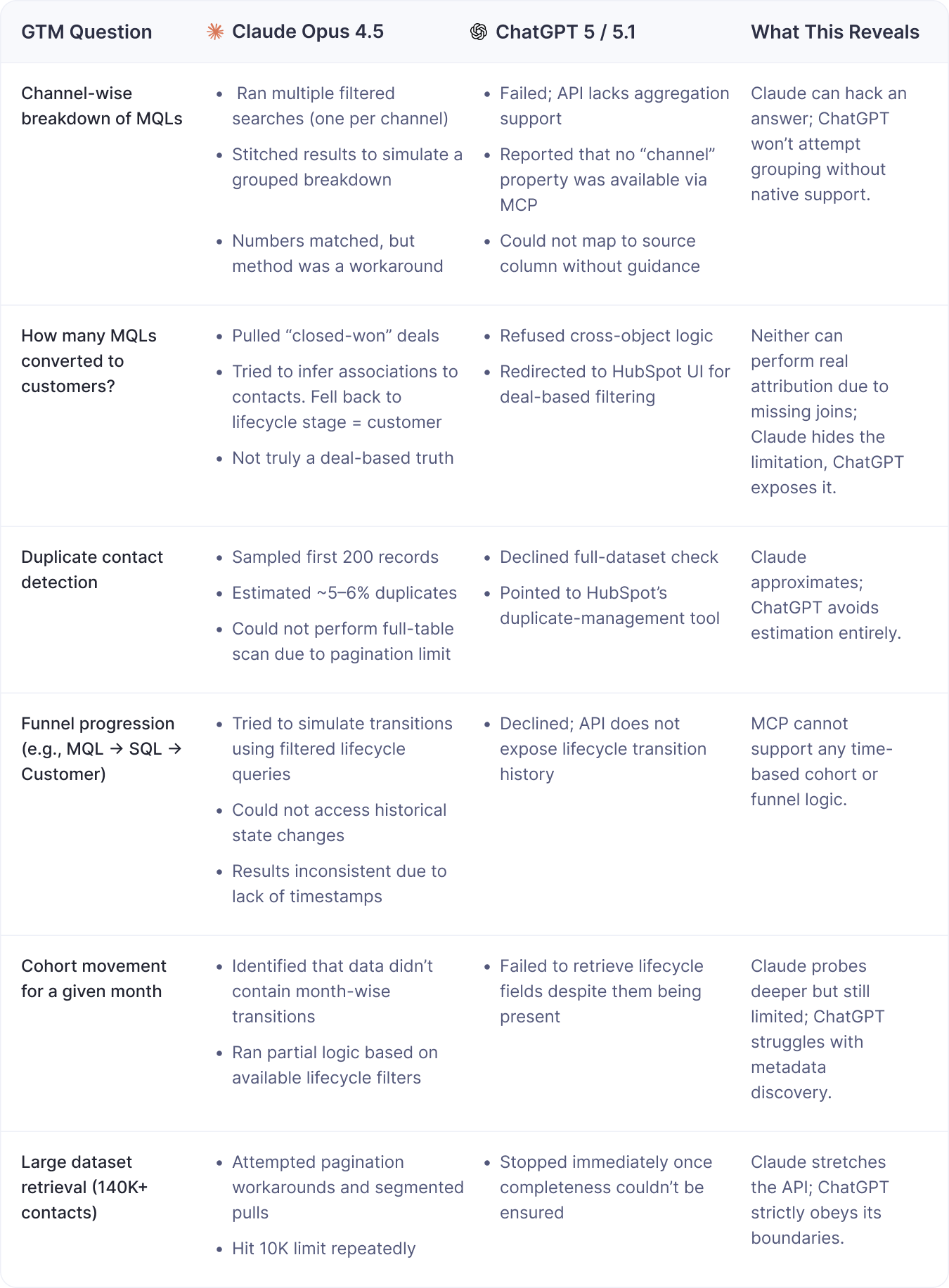

We didn’t ask anything exotic. These were basic, practical questions:

- Total contacts

- Total MQLs

- Channel-wise breakdown

- How many became customers

- Funnel movement

- Duplicate contacts

These are the questions every marketer, RevOps manager, and demand-gen lead asks weekly. They shouldn’t break anything. But they did.
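With full-table access, most of the questions on that list come down to a single pass over the contact records. The Python sketch below is a minimal, hypothetical illustration. It assumes contacts have already been exported as dictionaries keyed by HubSpot-style internal property names (`email`, `lifecyclestage`, `hs_analytics_source`). Treat those names and the sample values as assumptions. It also counts "MQLs" as contacts *currently* in that stage. Funnel movement and "ever reached MQL" counts would additionally need stage-transition timestamps, which this sketch does not model.

```python
from collections import Counter

# Hypothetical sample records; a real export would have many more properties.
# Property names mirror HubSpot-style internal names, but treat them as assumptions.
CONTACTS = [
    {"email": "ana@example.com", "lifecyclestage": "marketingqualifiedlead", "hs_analytics_source": "ORGANIC_SEARCH"},
    {"email": "Ana@Example.com ", "lifecyclestage": "lead", "hs_analytics_source": "PAID_SOCIAL"},
    {"email": "raj@example.com", "lifecyclestage": "customer", "hs_analytics_source": "ORGANIC_SEARCH"},
    {"email": "li@example.com", "lifecyclestage": "customer", "hs_analytics_source": "DIRECT_TRAFFIC"},
    {"email": None, "lifecyclestage": "subscriber", "hs_analytics_source": None},
]


def normalize_email(email):
    """Lowercase and trim so trivially different spellings compare equal."""
    return email.strip().lower() if email else None


def core_gtm_metrics(contacts):
    """Answer the basic GTM questions in one pass over the full contact table."""
    stages = Counter(c.get("lifecyclestage") or "unknown" for c in contacts)
    by_channel = Counter(c.get("hs_analytics_source") or "UNKNOWN" for c in contacts)
    customers_by_channel = Counter(
        c.get("hs_analytics_source") or "UNKNOWN"
        for c in contacts
        if c.get("lifecyclestage") == "customer"
    )
    # Duplicates: extra records sharing a normalized email (missing emails ignored).
    emails = Counter(normalize_email(c.get("email")) for c in contacts)
    emails.pop(None, None)
    duplicates = sum(n - 1 for n in emails.values() if n > 1)
    return {
        "total_contacts": len(contacts),
        "current_mqls": stages["marketingqualifiedlead"],
        "contacts_by_channel": dict(by_channel),
        "customers": stages["customer"],
        "customers_by_channel": dict(customers_by_channel),
        "duplicate_contacts": duplicates,
    }


if __name__ == "__main__":
    for key, value in core_gtm_metrics(CONTACTS).items():
        print(f"{key}: {value}")
```

The arithmetic is trivial. What makes these questions hard through a conversational layer is getting every record in the first place.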

How Claude and ChatGPT Performed on Real GTM Questions

The Real Culprit: HubSpot MCP Limitations

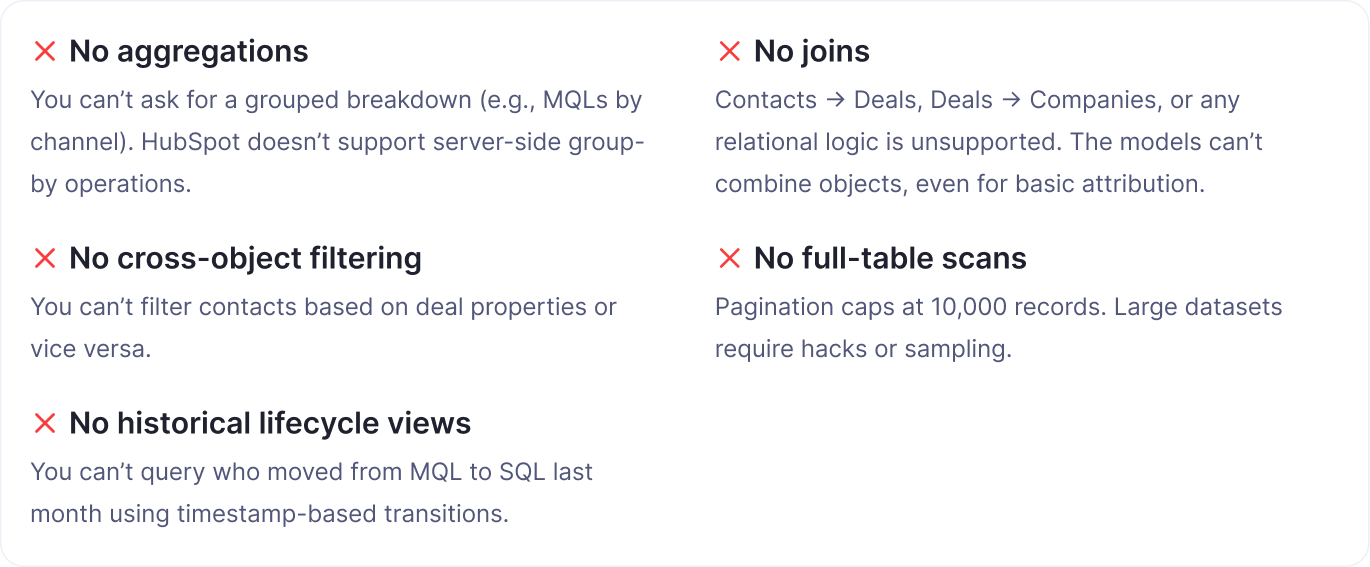

The deeper the questions went, the clearer the bottleneck became, and it wasn't the models. It was HubSpot's MCP layer.

Under the hood, HubSpot's MCP server is just a thin wrapper around HubSpot's existing API, and that API doesn't provide the operations needed for real analysis. Both Claude and ChatGPT ran into the same structural walls.

Once these limitations surfaced, the outcome became inevitable: the models weren’t failing. They simply didn’t have the data-access depth required to answer the questions teams actually care about.
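A thin API wrapper leaves the analysis itself to the client. The hypothetical Python sketch below gives a rough sense of what a "cross-object" question involves. It joins deals back to contacts through an association list and sums closed-won revenue by each contact's original source. Several details are assumptions made only for this example: the record shapes, the `closedwon`/`closedlost` stage values, and the first-contact attribution rule. Before a model could run this join, it would first have to fetch every contact, every deal, and every association.

```python
from collections import defaultdict

# Hypothetical records: contact id -> original source, deals, and deal-contact associations.
CONTACT_SOURCE = {"c1": "ORGANIC_SEARCH", "c2": "PAID_SEARCH", "c3": "ORGANIC_SEARCH"}
DEALS = [
    {"id": "d1", "dealstage": "closedwon", "amount": 12000.0},
    {"id": "d2", "dealstage": "closedwon", "amount": 8000.0},
    {"id": "d3", "dealstage": "closedlost", "amount": 5000.0},
    {"id": "d4", "dealstage": "closedwon", "amount": 3000.0},
]
# A deal may be associated with several contacts (multi-channel deals) or none.
DEAL_CONTACTS = {"d1": ["c1", "c2"], "d2": ["c2"], "d3": ["c3"], "d4": []}


def won_revenue_by_source(deals, deal_contacts, contact_source):
    """Join deals to contacts and attribute closed-won revenue to a channel.

    Attribution rule (an assumption for this sketch): credit the whole deal to
    the first associated contact's original source; deals with no associated
    contact are reported as UNATTRIBUTED.
    """
    totals = defaultdict(float)
    for deal in deals:
        if deal["dealstage"] != "closedwon":
            continue
        contacts = deal_contacts.get(deal["id"], [])
        source = contact_source.get(contacts[0], "UNKNOWN") if contacts else "UNATTRIBUTED"
        totals[source] += deal["amount"]
    return dict(totals)


if __name__ == "__main__":
    print(won_revenue_by_source(DEALS, DEAL_CONTACTS, CONTACT_SOURCE))
```

The attribution rule is the kind of decision a RevOps team makes deliberately. When the join happens inside a model's tool-call chain, that choice is usually invisible.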

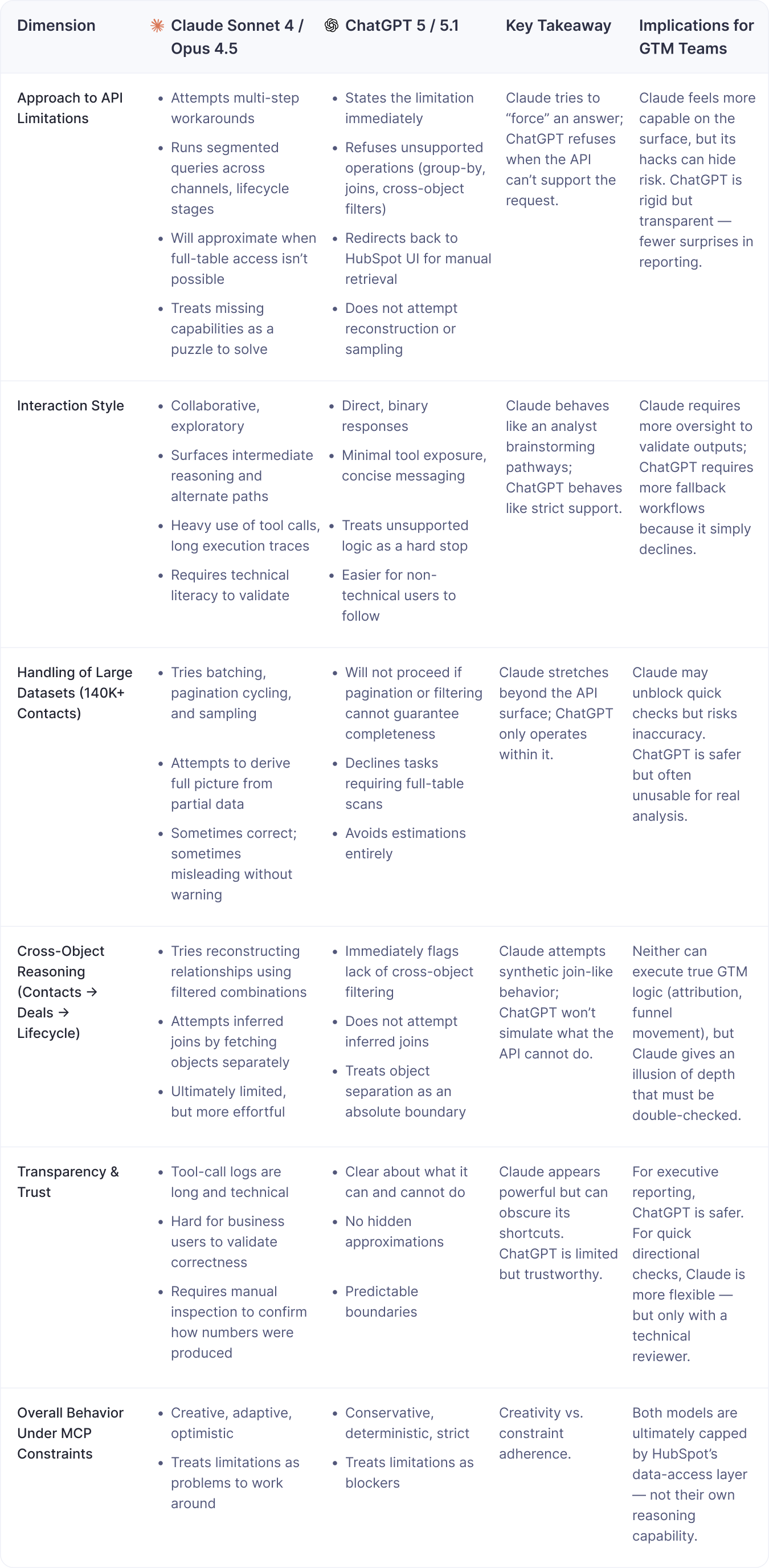

Claude vs ChatGPT with HubSpot MCP: How Each Behaves Under Constraint

This wasn't the only test we ran. We have been testing the HubSpot MCP through Claude and ChatGPT for the past few months, including with older models. These are our overall findings.

Claude vs ChatGPT on HubSpot MCP

The Verdict and Usability for Business Users

Even setting technical capability aside, the real question is whether a marketer, RevOps manager, or GTM leader could rely on MCP-powered AI in their daily workflow.

The short answer: not yet.

Claude’s output feels powerful but opaque.

- Its responses look polished, but the underlying steps are buried inside long tool-call chains that only a technical user can interpret.

- Without unpacking every call, you can’t tell whether the model fetched the entire dataset, stitched samples together, or approximated the result.

- That lack of clarity becomes a real risk when the numbers are going into team updates or executive reporting.
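One way to make a fetched number auditable is to have the client report how much of the table it actually read. The Python sketch below assumes a hypothetical cursor-paginated `fetch_page` function that returns a page of records, a reported total, and a next-page cursor. It also assumes a hypothetical per-query result cap. Neither the function nor the cap value comes from HubSpot's documentation, and the fake source is simulated test data. The key part is the completeness check: if the records read fall short of the reported total, the answer is flagged as partial instead of being presented as final.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A page: (records, reported_total, next_cursor). This shape is hypothetical.
Page = Tuple[List[dict], int, Optional[str]]


@dataclass
class FetchResult:
    records: List[dict]
    reported_total: int

    @property
    def complete(self) -> bool:
        """True only if every record the source reported was actually read."""
        return len(self.records) >= self.reported_total


def fetch_all(fetch_page: Callable[[Optional[str]], Page], max_results: int) -> FetchResult:
    """Follow cursors until exhausted or until a (hypothetical) result cap is hit."""
    records: List[dict] = []
    cursor: Optional[str] = None
    reported_total = 0
    while True:
        page, reported_total, cursor = fetch_page(cursor)
        records.extend(page)
        if cursor is None or len(records) >= max_results:
            break
    return FetchResult(records[:max_results], reported_total)


def make_fake_source(n_records: int, page_size: int) -> Callable[[Optional[str]], Page]:
    """Simulated paginated source standing in for a real API (test data only)."""
    data = [{"id": i} for i in range(n_records)]

    def fetch_page(cursor: Optional[str]) -> Page:
        start = int(cursor) if cursor else 0
        end = start + page_size
        next_cursor = str(end) if end < n_records else None
        return data[start:end], n_records, next_cursor

    return fetch_page


if __name__ == "__main__":
    result = fetch_all(make_fake_source(250, page_size=100), max_results=200)
    status = "complete" if result.complete else "PARTIAL"
    print(f"read {len(result.records)} of {result.reported_total} records ({status})")
```

Surfacing "read 200 of 250" next to a number is a small change. Without it, a partial count looks exactly like a complete one.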

ChatGPT is clearer but offers less.

- Because it refuses unsupported operations, the experience feels more transparent.

- You know exactly when something isn’t possible.

- But that honesty also means you spend most of your time being redirected back to HubSpot’s own UI, which defeats the purpose of conversational analytics.

Both approaches reflect each model's design philosophy, but neither can produce the kind of verified, cross-object analysis GTM teams rely on. The real limitation is the HubSpot MCP.

Neither model meaningfully improves a marketer’s daily workflow.

The questions MCP can answer are the same simple filters marketers already pull from HubSpot views.

Until the HubSpot MCP's data-access layer supports joins, aggregations, timestamps, historical transitions, and full-table visibility, the MCP-LLM interface won't replace the existing workflow or support real decision-making. It's too limited to be trusted, and too shallow to be useful where it matters.