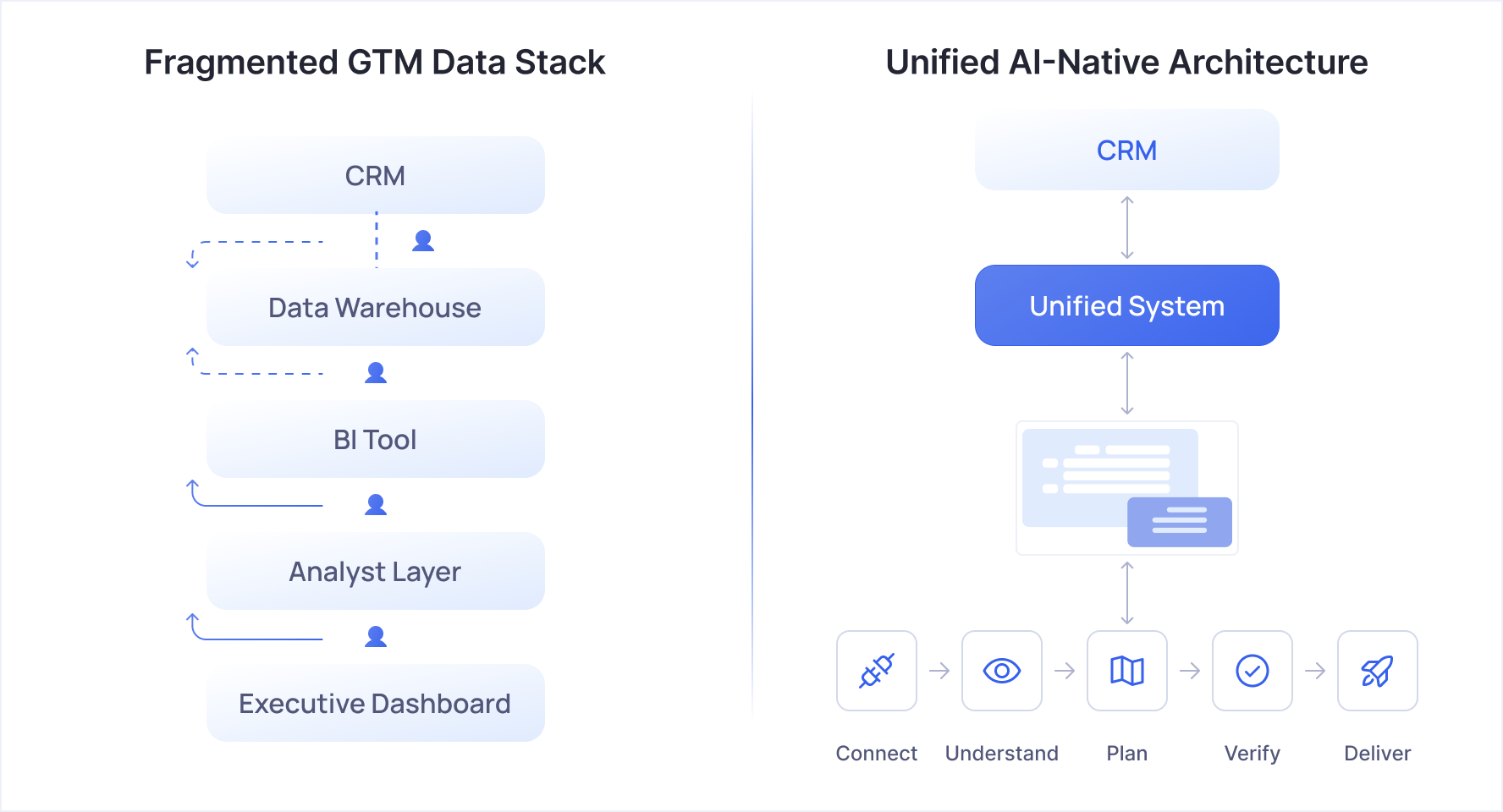

- Most go-to-market data stacks are still structurally broken in 2026 because data flows through too many disconnected tools, teams, and handoffs before it reaches decision-makers. No one person can defend every metric end-to-end.

- The root issue is architectural, not managerial. Better coordination or project management will not fix it.

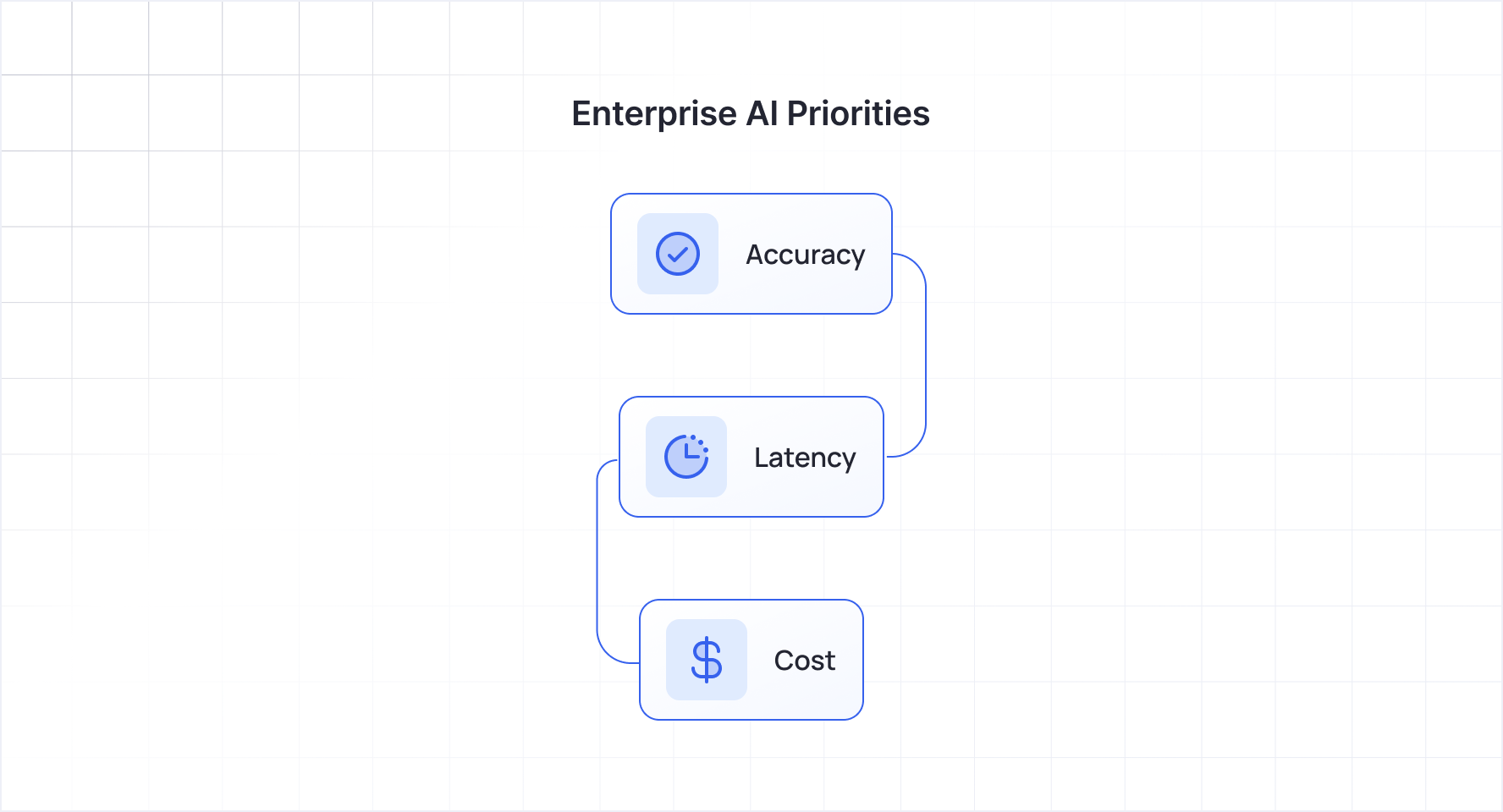

- Enterprise leaders need accurate and verifiable numbers, not just faster AI outputs. Accuracy must come first, then speed, then cost.

- Legacy analytics and CRM vendors add AI features but do not solve the core fragmentation beneath them.

- A unified system that maps business context to data, shows analysis plans before execution, and verifies results restores organizational trust in metrics.


I recently sat down with Jake Villanueva on his podcast to talk about what we're building at Petavue, why enterprise data teams are set up to fail, and what the big vendors aren't saying out loud. Here's what I shared and a few things I've been thinking about since.

I come from a family of priests. Not metaphorically, literally. About eighty percent of the men in my household are priests in the Hindu tradition. I grew up in Mumbai, half-trained for the role myself, in a conservative household where faith was the organizing principle of daily life.

It's the kind of background people don't expect from a tech CEO, but I keep drawing this comparison with my family. I tell them: we're in the same business. You give people hope about the almighty and I give people hope about a future that hasn't been built yet. When you're selling an enterprise product that asks people to rethink how they've done something for twenty years, you're asking for faith. You'd better be worthy of it.

What My Startup Acquisition Taught Me

Before Petavue, I co-founded a company that was acquired by ZoomInfo during COVID. I joined as VP of Engineering and helped scale the India operations from 15 to 500 people. But the path to that acquisition wasn't glamorous. By the time the offer materialized, I was fully broke. No assets, no savings, nothing.

The hardest part of getting acquired isn't the deal; it's making the decision. You receive the offer because you're doing well, which means there's a roadmap to grow. So you're staring at the most fundamental question: do you bet on the much bigger outcome, or do you take this exit, knowing that what comes after is completely unclear?

My rubric, and the advice I still give founders who ask: you have to be selfish. The founders and their immediate families are the ones who've borne the capital risk, the time risk, the health risk. The core decision has to come down to what they want. You layer in other considerations (we set aside a pool of money for team members who hadn't even vested stock yet), but the foundation has to be founder alignment.

At ZoomInfo, I learned how differently large companies operate.

In a startup, everyone is aligned on a single outcome: the company has to succeed. People literally resign if they feel they're not adding value. In a large public company, people have split priorities: success, but also position, salary, and territory.

I went in with my startup mentality, told the CEO "if you fire me, so be it," and pushed hard until the day I left. But understanding those different incentive structures changed how I think about building organizations.

The Structural Problem in GTM Data Nobody Talks About

Data teams are among the most inefficient teams in an enterprise. That's the conviction I developed at ZoomInfo, and it's the reason Petavue exists.

Here's a simple example. By the time a metric or report reaches an executive in a board meeting, four or five people have touched it — a data engineer bringing data together, an analyst building metrics, a business person layering on insights. They're spread across different teams using different tools. By the time the insight reaches the executive, no single person can explain how it was produced.

That's not a people problem. It's architectural. The data stack is deeply technical, so the people who calculate metrics are engineers, but they lack business context. The context comes from someone else. There's always a communication gap. Everything becomes slow, fragile, and impossible to audit.

If you think it's a coordination problem, you'll try better project management. If you recognize it's an architecture problem, you rethink the architecture. That's what we chose to do.

The three co-founders of Petavue, all with deep data backgrounds from ZoomInfo, chose this problem for a specific reason: AI is far more effective in domains where results are objective and verifiable. Math is not subjective. It's either right or wrong. You can defend the answer.

Why I Refuse to Compromise on Accuracy

A dangerous narrative is taking hold in the AI world, and I want to push back on it directly.

At a recent Bay Area event, the CEO of a scaled-up AI startup told the room that we're living in a world of non-determinism now. Everyone should get used to results not being accurate all the time. I sat there thinking: nothing could be further from the truth.

People will not accept inaccurate results just because AI makes things faster. Executives still expect numbers they can run their business on. They're not explicitly saying "don't come to me with bad data," but that's exactly what they expect. It's on us as builders to understand that. LLMs get you eighty percent of the way there. The real work, and the real opportunity, is the last twenty percent to full accuracy.

At Petavue, our internal priority stack is: accuracy first, latency second, cost third. Until you solve accuracy, don't optimize for anything else.
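To make that ordering concrete, here is a minimal sketch of "accuracy first, latency second, cost third" as a selection rule. It is an illustration only, not our production code: the `Candidate` type, the `pick` function, the accuracy threshold, and every number below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    """A hypothetical way of answering a question (all fields are made up)."""
    name: str
    accuracy: float   # fraction of verified-correct answers on a test set
    latency_s: float  # seconds per answer
    cost_usd: float   # dollars per answer


def pick(candidates: list[Candidate], min_accuracy: float) -> Optional[Candidate]:
    # Gate on accuracy first: anything below the bar is not considered at all.
    accurate = [c for c in candidates if c.accuracy >= min_accuracy]
    if not accurate:
        return None  # nothing is accurate enough, so there is nothing to optimize yet
    # Among accurate options: highest accuracy, then lowest latency, then lowest cost.
    return min(accurate, key=lambda c: (-c.accuracy, c.latency_s, c.cost_usd))


candidates = [
    Candidate("fast-cheap", accuracy=0.80, latency_s=2.0, cost_usd=0.01),
    Candidate("verified", accuracy=0.99, latency_s=40.0, cost_usd=0.30),
    Candidate("verified-cached", accuracy=0.99, latency_s=12.0, cost_usd=0.45),
]

best = pick(candidates, min_accuracy=0.95)
print(best.name)  # verified-cached
```

The cheap, fast option never wins here, no matter how cheap or fast it is, because it fails the accuracy gate. Cost only breaks ties after accuracy and latency are settled.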

This shapes everything about how the product works:

Connect → Understand → Plan → Verify → Deliver. You plug in your systems: Salesforce, HubSpot, a data warehouse, whatever your stack looks like. Petavue instantly builds a semantic layer that maps your data to your business context in plain English.

When you run an analysis, the system presents a plan first — here's how I'll bring these data sources together, here are the calculations. You review, approve, and our verification layers guarantee the execution is accurate. Ten minutes, start to finish.
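The plan-then-verify flow described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Petavue's implementation: the `Step`, `Plan`, and `execute` names, the sample deals, and the two checks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    description: str             # plain-English text shown to the reviewer
    run: Callable[[dict], dict]  # transformation applied to the working state


@dataclass
class Plan:
    question: str
    steps: list[Step]
    approved: bool = False

    def describe(self) -> list[str]:
        # What the reviewer sees before anything is executed.
        return [f"{i}. {s.description}" for i, s in enumerate(self.steps, 1)]


def execute(plan: Plan, state: dict, checks: list[Callable[[dict], bool]]) -> dict:
    if not plan.approved:
        raise PermissionError("plan must be reviewed and approved before execution")
    for step in plan.steps:
        state = step.run(state)
    # Verification: every check must pass before a result is delivered.
    failed = [check.__name__ for check in checks if not check(state)]
    if failed:
        raise ValueError(f"verification failed: {failed}")
    return state


# Hypothetical sample data standing in for a connected CRM.
deals = [
    {"id": 1, "stage": "won"},
    {"id": 2, "stage": "lost"},
    {"id": 3, "stage": "won"},
    {"id": 4, "stage": "open"},
]


def keep_closed(state: dict) -> dict:
    closed = [d for d in state["deals"] if d["stage"] in ("won", "lost")]
    return {**state, "closed": closed}


def compute_win_rate(state: dict) -> dict:
    won = sum(1 for d in state["closed"] if d["stage"] == "won")
    return {**state, "win_rate": won / len(state["closed"])}


def rate_in_range(state: dict) -> bool:
    return 0.0 <= state["win_rate"] <= 1.0


def closed_count_matches(state: dict) -> bool:
    # Independent recount of closed deals from the raw input.
    return len(state["closed"]) == sum(d["stage"] != "open" for d in state["deals"])


plan = Plan(
    question="What is our win rate?",
    steps=[
        Step("Keep only closed deals (won or lost)", keep_closed),
        Step("Divide won deals by closed deals", compute_win_rate),
    ],
)

for line in plan.describe():
    print(line)

plan.approved = True  # the human review step
result = execute(plan, {"deals": deals}, [rate_in_range, closed_count_matches])
print(round(result["win_rate"], 3))  # 0.667
```

The point of the design is that the plan is data: it can be shown, approved, and stored alongside the result, so anyone can later see how a number was produced.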

One customer told us what used to take two days now takes twenty minutes. Another, asked to describe the value during a pilot, was almost offended by the question: "How can you even ask that?"

The real benefit is renewed organizational trust. Because everything happens in one system with a transparent record of how every insight was derived, you eliminate the chronic problem of debating whether numbers are right.

Today, most data team effort goes toward ensuring you report correct numbers to executives. Anyone below VP level gets nothing; they're operating on intuition. That's the gap we're closing.

What the Big Vendors Won't Say Out Loud

At Dreamforce, someone in the audience stood up in a room full of Salesforce executives promoting Agentforce and said: we want breakthroughs, not piecemeal AI. That took courage. And it captured something I hear constantly from people inside public companies, beyond the press releases.

There is a real adoption challenge. We've oversimplified AI. We've sold too much of a dream.

Here's what Tableau, Power BI, Looker, and Salesforce Einstein are actually doing: basic filtering and explanation at the top layer. You still clean data in one tool, compute metrics in another, visualize in a third. The AI sits on top, making the last mile slightly faster, without touching the structural problem underneath.

If you don't consolidate vertically, bringing the entire pipeline into one system, the productivity gains don't materialize. The full visibility into how numbers are calculated never happens. People see through piecemeal AI quickly.

The legacy vendors would need to rearchitect their entire platforms to compete. The innovator's dilemma is real, and if there's anything I'm confident about, it's that they can't pull it off.

Here's the irony: Tableau's original pitch twenty years ago was exactly the same promise — free business teams from dependency on IT. Twenty years and billions of dollars later, we're solving the same problem. That tells you everything about the ceiling of the existing approach.

The Human-in-the-Loop Reality

If you're doing AI in the enterprise without humans in the loop, it's not going to work. I don't think most companies have realized this yet.

It's a chicken-and-egg problem. You want your AI product to work perfectly out of the box. But you can't get there without real customers revealing the edge cases.

This isn't ChatGPT, where you'll forgive a $20 tool. Enterprise customers paying ten or thirty thousand dollars expect it to work every time.

What the Next Five Years Look Like

Consolidation before everything else. You cannot realize AI productivity gains with data split across three or four tools. AI-native CRMs are already collapsing five tools into one. The same wave is coming for the data stack.

The analyst role survives but the expectations explode. Some companies will cut headcount. Smarter ones will keep the same team and demand ten times the output. If you're choosing your next role, ask the company: do they see AI as a job killer or a ten-times multiplier? That answer tells you everything.

At Petavue, we're heading into 2026 building three things:

- Agentic dashboards: because creating a dashboard is ten percent of the work and maintaining it is ninety percent. We've solved creation; now we're automating maintenance.

- Personalized insights for everyone: not just the executive layer, but every person in the company who makes decisions.

- Uncompromising accuracy: because people are already second-guessing their metrics. They don't need another layer of doubt.

This piece was inspired by my conversation with Jake Villanueva. If the problems I've described sound familiar, if your team spends more time debating numbers than acting on them, I'd love to talk. Find us at petavue.com or reach out to me on LinkedIn.