Claude Code has been the go-to model and agentic system for development for a while now. So when Google launched Antigravity alongside Gemini 3 with all the hype, we figured it was the right time to put both systems to a real SOTA (state-of-the-art) test.

I sat down with our CTO, Jeyaraj, for a focused 40‑minute conversation on how these two models actually behave in real engineering work. This wasn’t theoretical. It was based on a production module he built using both systems, a module that went from idea to production in just 20 hours.

Prefer watching over reading? Here’s the full SOTA comparison:

Production Build: A Scalable and Self-Improving HubSpot → S3 ETL Module

Jeyaraj picked a real module we are now rebuilding due to growing customer demand: a HubSpot → S3 data sync service that needs to handle large datasets, parallel processing, rate limits, memory constraints, and clean handoffs to other microservices.

Our expectation was simple: produce a production-grade module, end to end, the way a solid engineer would.

The requirements were straightforward but demanding:

- Export HubSpot objects that can exceed 500K rows.

- Handle multi-GB payloads without blowing through memory limits.

- Stream, chunk, and upload data using S3 multipart uploads.

- Optimize for Lambda/EC2 constraints.

- Retry intelligently on token expiry or rate limits.

- Integrate cleanly with other services that depend on the final output.

- Avoid silent failures, race conditions, or incorrect status updates.
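To make the streaming and multipart requirements concrete, here is a minimal sketch in Python. It is not the module Jeyaraj built; it only illustrates the technique. It assumes `s3` is a boto3 S3 client (or anything exposing the same four multipart methods), and the function names `iter_parts` and `stream_to_s3` and the 8 MiB default part size are our own illustrative choices.

```python
from typing import Iterable, Iterator

# S3 requires every part except the last one to be at least 5 MiB.
MIN_PART_SIZE = 5 * 1024 * 1024


def iter_parts(chunks: Iterable[bytes], part_size: int) -> Iterator[bytes]:
    """Regroup a stream of arbitrarily sized chunks into fixed-size parts.

    Only one part's worth of data (plus the latest chunk) is held in
    memory at a time. The final part may be smaller than part_size.
    """
    buffer = bytearray()
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= part_size:
            yield bytes(buffer[:part_size])
            del buffer[:part_size]
    if buffer:
        yield bytes(buffer)


def stream_to_s3(s3, bucket: str, key: str, chunks: Iterable[bytes],
                 part_size: int = 8 * 1024 * 1024) -> int:
    """Upload a chunk stream as one object; returns the number of parts.

    `s3` is assumed to be a boto3 S3 client. An empty stream is not
    handled specially in this sketch.
    """
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size must be at least 5 MiB")

    upload_id = s3.create_multipart_upload(Bucket=bucket, Key=key)["UploadId"]
    parts = []
    try:
        for number, body in enumerate(iter_parts(chunks, part_size), start=1):
            response = s3.upload_part(
                Bucket=bucket, Key=key, PartNumber=number,
                UploadId=upload_id, Body=body,
            )
            parts.append({"PartNumber": number, "ETag": response["ETag"]})
        s3.complete_multipart_upload(
            Bucket=bucket, Key=key, UploadId=upload_id,
            MultipartUpload={"Parts": parts},
        )
    except Exception:
        # Abort so a failed export does not leave orphaned parts behind,
        # then re-raise so the failure is never silent.
        s3.abort_multipart_upload(Bucket=bucket, Key=key, UploadId=upload_id)
        raise
    return len(parts)
```

The point of the sketch is the shape: memory use is bounded by the part size rather than the export size, and a failure aborts the upload and surfaces the error instead of leaving a half-written object marked as done.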

This is the kind of work that exposes weaknesses fast. If an agentic system struggles with architecture, planning, or foresight, it shows.
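The retry requirement from the list above is a good example of where foresight matters. Below is a minimal sketch of one way to handle it, not the code from our build. The `RateLimited` and `TokenExpired` exceptions, the `call_with_retry` helper, and its parameters are all hypothetical; we assume the HTTP layer maps 429 and 401 responses to those exceptions.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical: raised by the HTTP layer on a 429 response."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, if the server supplied a hint


class TokenExpired(Exception):
    """Hypothetical: raised by the HTTP layer on a 401 response."""


def call_with_retry(request: Callable[[], T],
                    refresh_token: Callable[[], None],
                    max_attempts: int = 5,
                    base_delay: float = 1.0,
                    max_delay: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Run request(), refreshing the token or backing off as needed."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except TokenExpired:
            if attempt == max_attempts:
                raise
            # An expired token is not a load problem: refresh and retry now.
            refresh_token()
        except RateLimited as exc:
            if attempt == max_attempts:
                raise
            # Prefer the server's hint; otherwise use exponential backoff
            # with full jitter so parallel workers do not retry in lockstep.
            backoff = min(max_delay, base_delay * 2 ** (attempt - 1))
            if exc.retry_after is not None:
                delay = exc.retry_after
            else:
                delay = random.uniform(0, backoff)
            sleep(delay)
    raise AssertionError("loop always returns or raises")
```

The two failure modes are deliberately treated differently: an expired token triggers a refresh and an immediate retry, while a rate limit triggers a wait. Once attempts run out, the last error is re-raised rather than swallowed.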

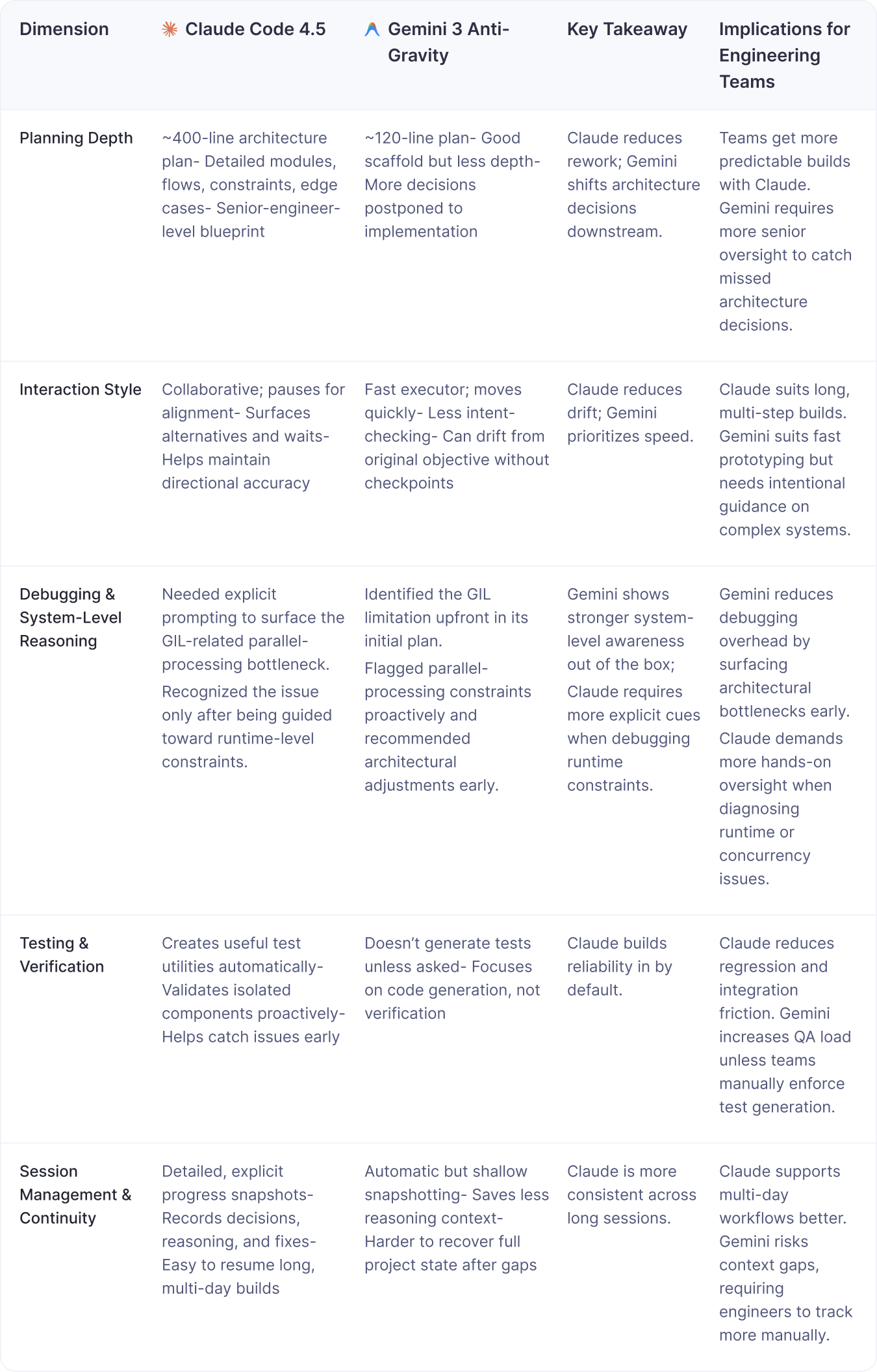

Side-by-Side Analysis: Across 5 Engineering Dimensions

Once we put both systems against the demands of a real production build, the practical differences between Claude Code and Gemini 3 Antigravity weren’t about speed; they were about engineering philosophy.

Both can generate code. Both can scaffold quickly. But their approach to planning, reasoning, and iteration is fundamentally different. And those differences determine whether you ship a reliable product or a brittle liability.

Here’s a side-by-side assessment of where each system stood.

The Cost of Speed: Claude's Foresight vs. Gemini's Iteration

Across every engineering dimension, from initial planning to session continuity, both models proved capable but not interchangeable.

Claude Code approached the work with structure and alignment, which reduced costly downstream corrections. Gemini moved faster and handled scaffolding well, but that velocity demanded more explicit direction and more careful reasoning from the engineer.

The difference is stark:

In a serious build, the true bottlenecks aren't syntax or typing. They are reasoning, validation, and aligning components under real constraints.

A module that might take a senior engineer 2-3 weeks was completed in about 20 hours. The acceleration is undeniable.

But here’s the key: the job didn’t disappear; it reorganized.

We stopped writing lines of code and started spending our time on:

- Defining Intent

- Validating Architecture

- Correcting Edge Cases

- Ensuring Iterations Stay Aligned

AI handles the mechanical work; the engineer owns the verified reasoning and decision-making.

To Summarize

Claude felt steadier and more structured, reducing friction in our critical validation loops. Gemini felt energetic and reactive, forcing us to constantly course-correct.

The conversation shouldn't be focused on "Who's louder?" or "Who's the new incumbent?" It should be: Which system helps you move from intent to working code with the fewest unnecessary steps?

The systems that win will be the ones that move correctly with less supervision, not the ones that produce the most lines of code per minute. That is the mandate of the next era of AI engineering. The future is already here, but it still requires the engineer to think, verify, and lead.